I am interested in artificial intelligence and machine learning. My current research focuses on large language models.

Email / Google Scholar / GitHub / LinkedIn

We propose Domain-Aware Fine-Tuning (DAFT), a novel approach that combines batch normalization conversion with an integration of linear probing and fine-tuning. Batch normalization conversion mitigates feature distortion by reducing the modifications made to the neural network during fine-tuning. Integrating linear probing and fine-tuning optimizes the head layer while gradually adapting the feature extractor.

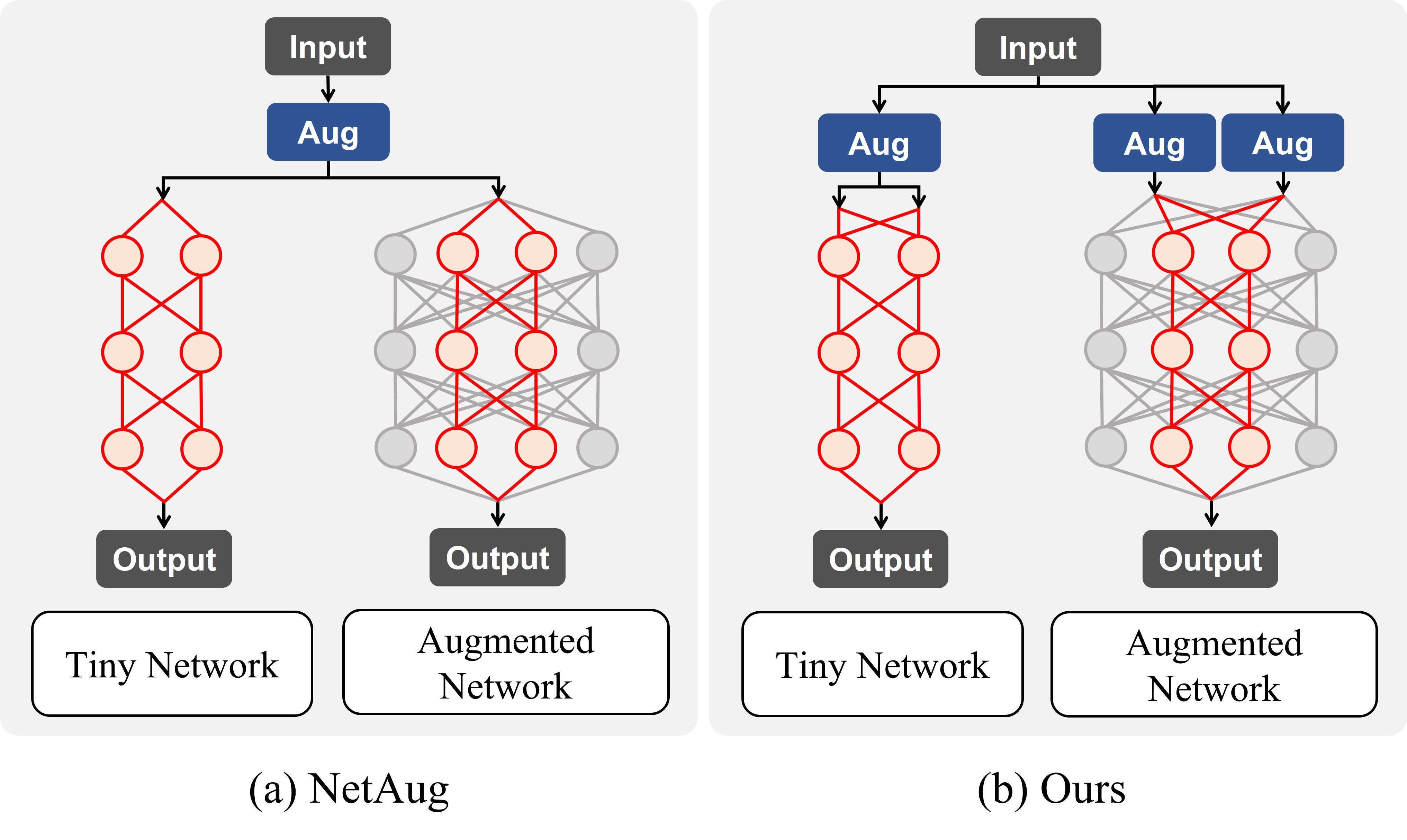

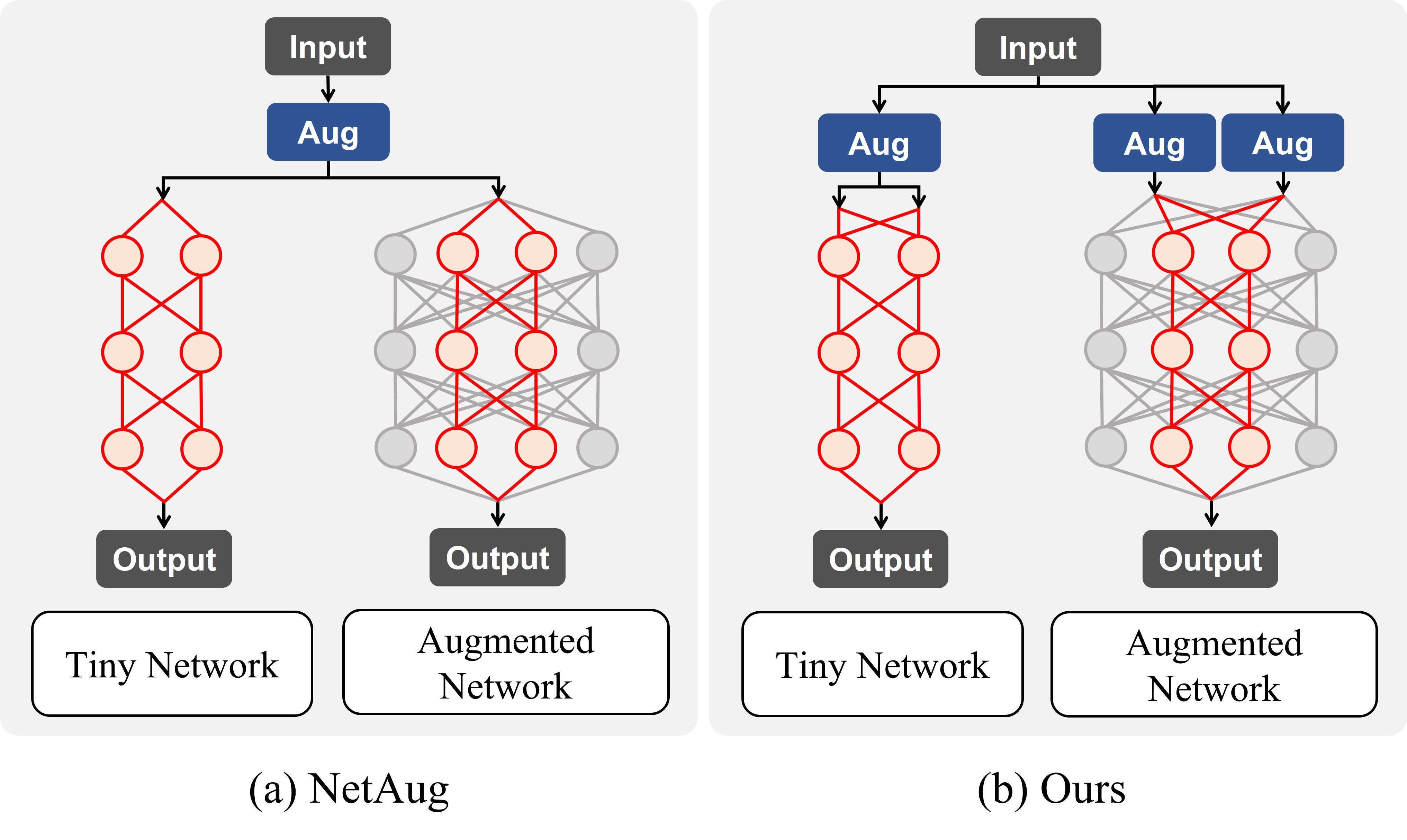

We propose a new method called Multi-Input Network Augmentation (MINA). MINA converts tiny neural networks into a multi-input configuration, allowing only the augmented model to receive more diverse inputs during training. After training, the tiny neural networks can be converted back into their original single-input configuration.

We propose a novel meta-ensemble learning approach based on a recent ensemble method, the multi-input multi-output (MIMO) configuration. Our approach can be easily applied to existing meta-learning algorithms. Multiple subnetworks within a single model learn multiple episodes simultaneously and ensemble their predictions, making use of the model's capacity.

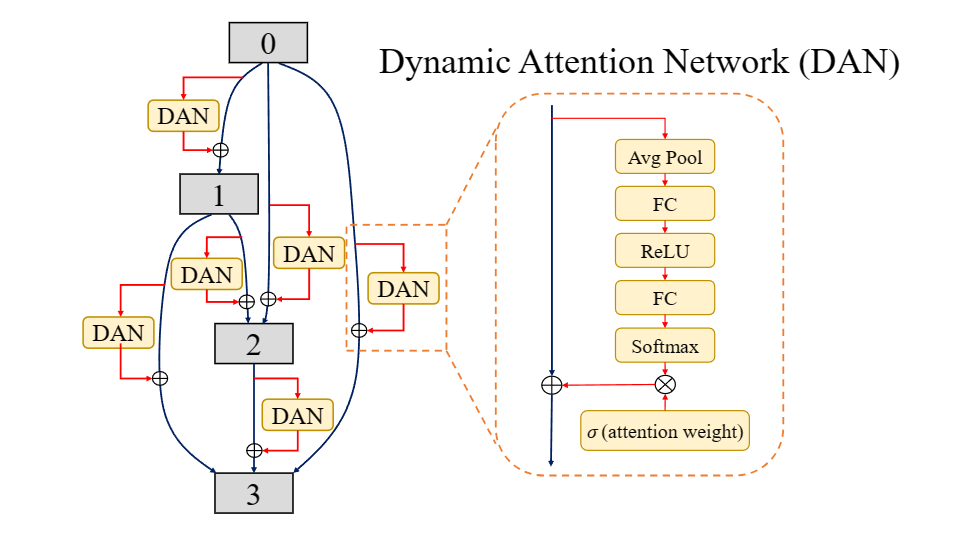

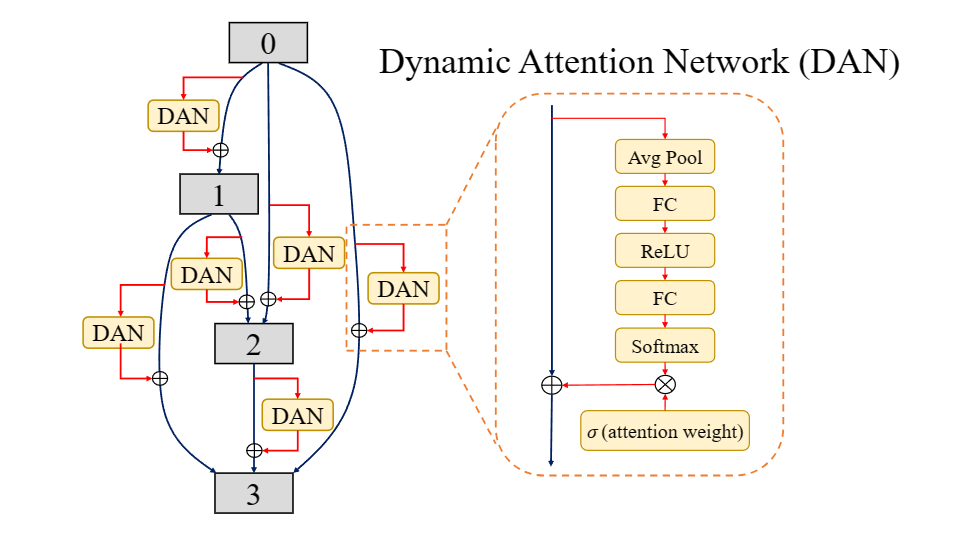

To overcome the bias of gradient-based search, we make the architecture dynamic during the search. This simple technique gives the gradient-based search an exploration effect. For effective exploration, we propose Dynamic Attention Networks (DANs), which change the neural architecture based on the input.

We propose a new method using deep learning. We improve the performance of the existing deep learning algorithm using an attention mechanism. We apply our algorithm to the OFDM radar environment as well as to the existing frequency-modulated continuous-wave (FMCW) radar.
